Bot·XD

What would a delivery from a humanoid robot look like? How can we design robots that are approachable, safe and sustainable? How might a manager assign and track tasks for robots working on the warehouse floor? In Bot·XD, I explore the robot experience from the perspective of the humans who interact with it.

Delivery XD

It's 11:02 a.m. in 2025, and a delivery bot is arriving with the urgent parcels you've been waiting for. What will that experience look like?

A long display cascades down the bot's front surface, providing a large canvas for branding and communication. En route, "Delivery in progress" is displayed to inform people nearby.

You open the front door to a compact humanoid presenting your parcels. The branding on the front display slides back to reveal your unique delivery code.

Scan the QR code and sign to have the parcels handed over.

A confirmation is displayed, and your packages are handed to you or placed nearby. At any point during the delivery, a face-to-face video chat with a human is just a shout or a tap away. Thanks for shipping with us!

Hardware

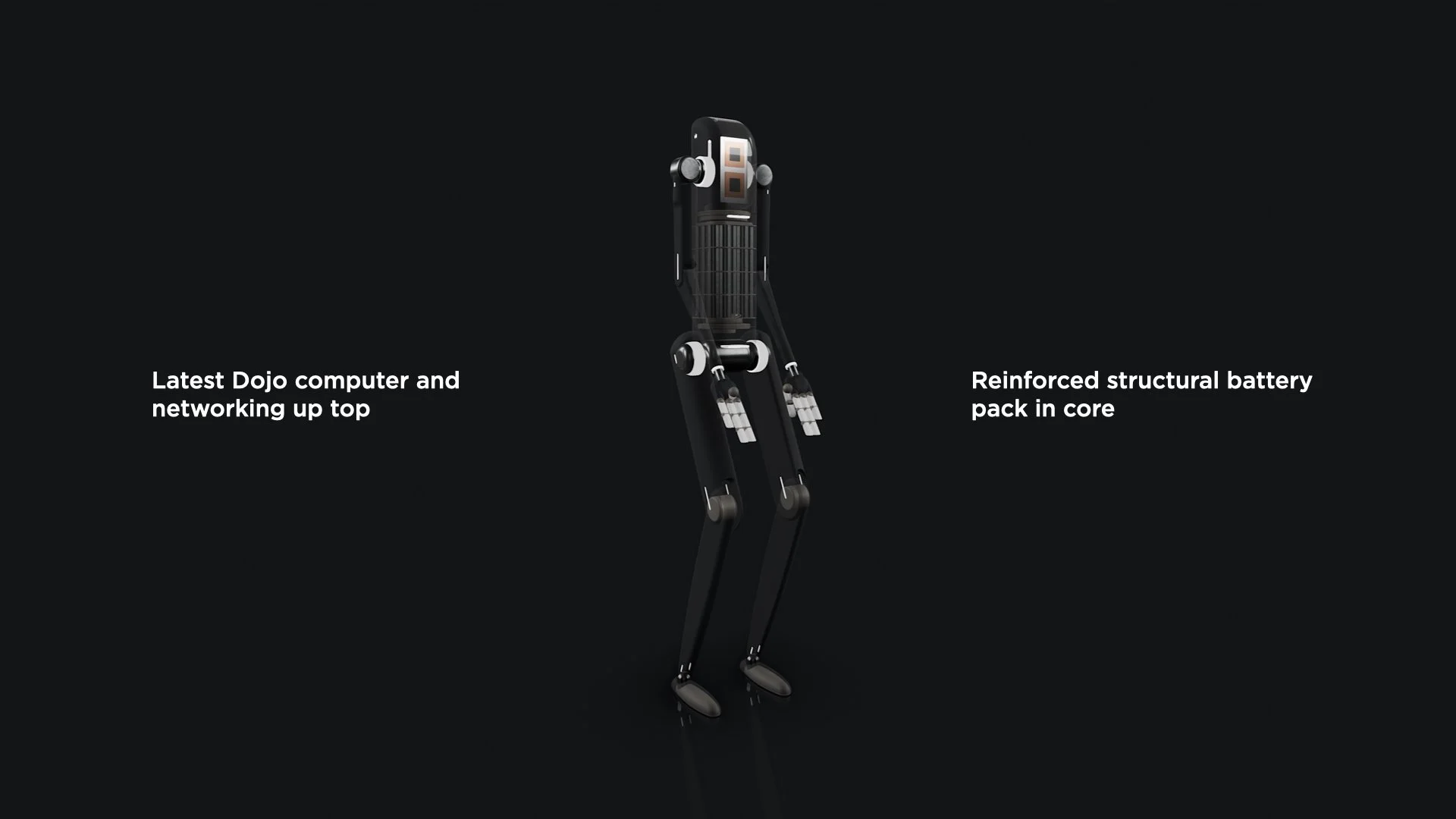

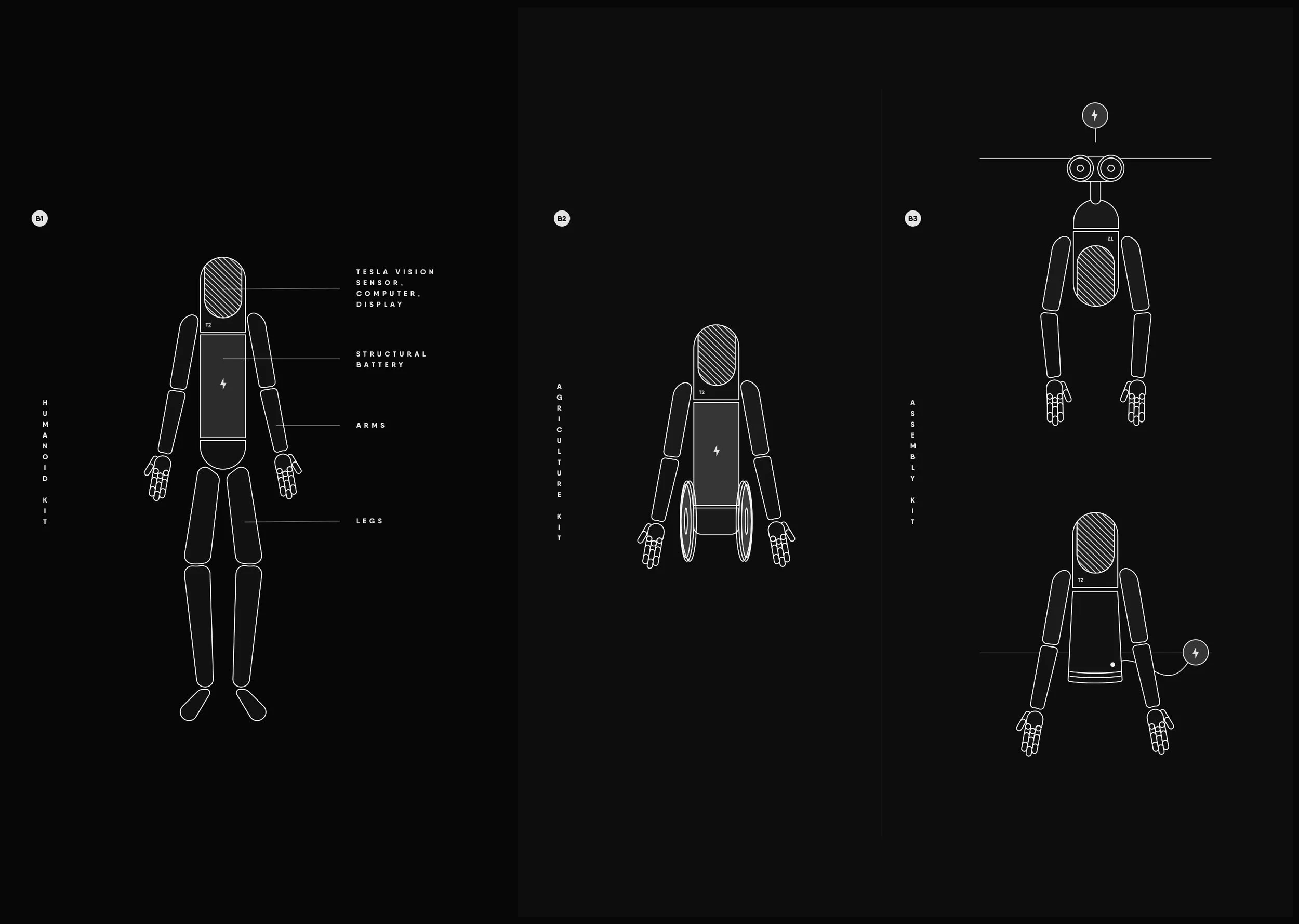

For hardware, I designed a modular, highly interactive and accessible bot.

LED strips on the bot's exterior illuminate moving parts and ensure visibility in low-light environments. Variable colours and brightness are used for alerts and communication.

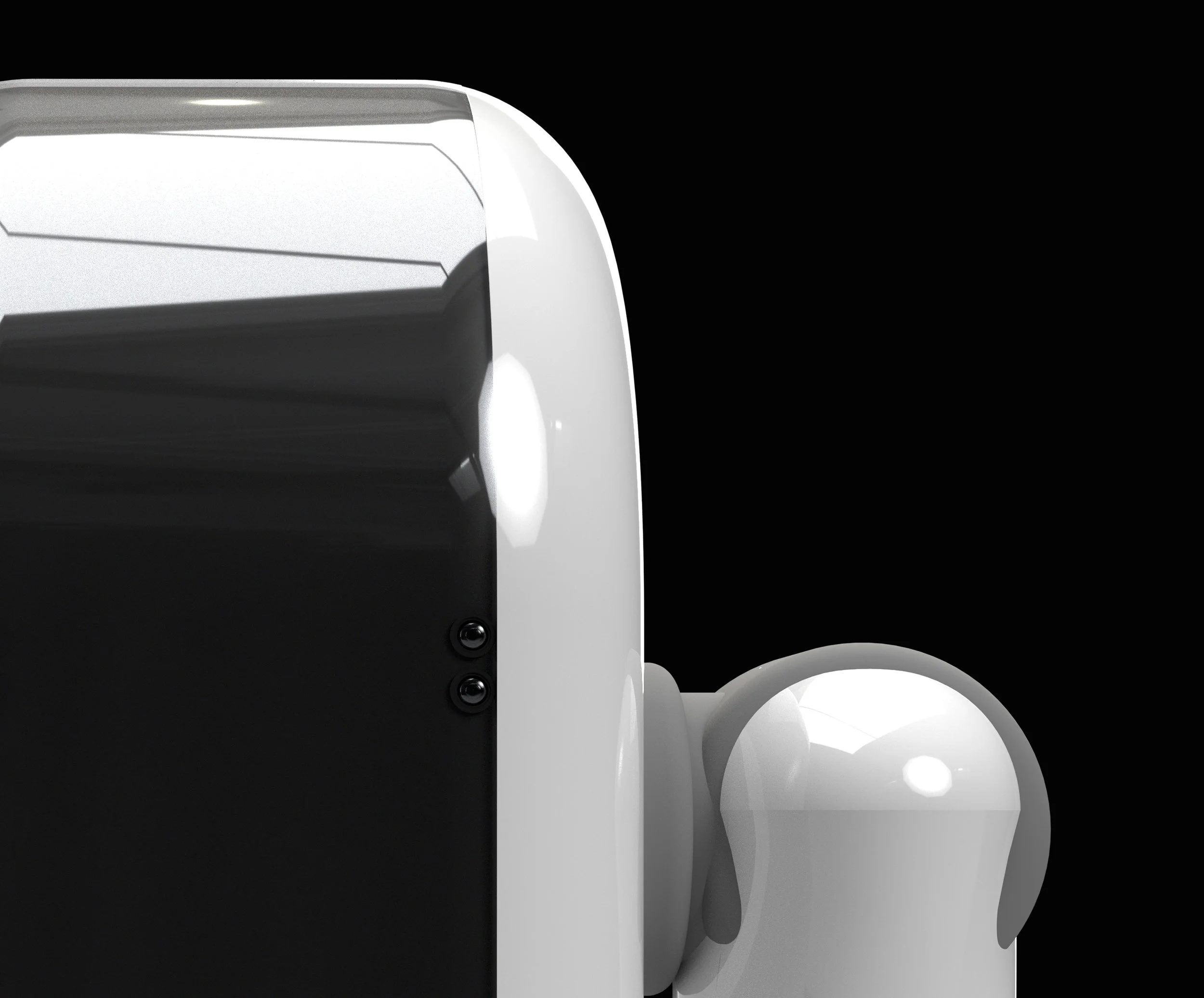

What about the face? In sci-fi, most humanoids come complete with shoulders, a neck and a face, but many industry experts advocate the opposite approach. I gave the Waterfall display a long, curved form for maximum flexibility and user interaction. The display provides ample space for branding and communication while clearly signalling that the bot is a tool, not a human.

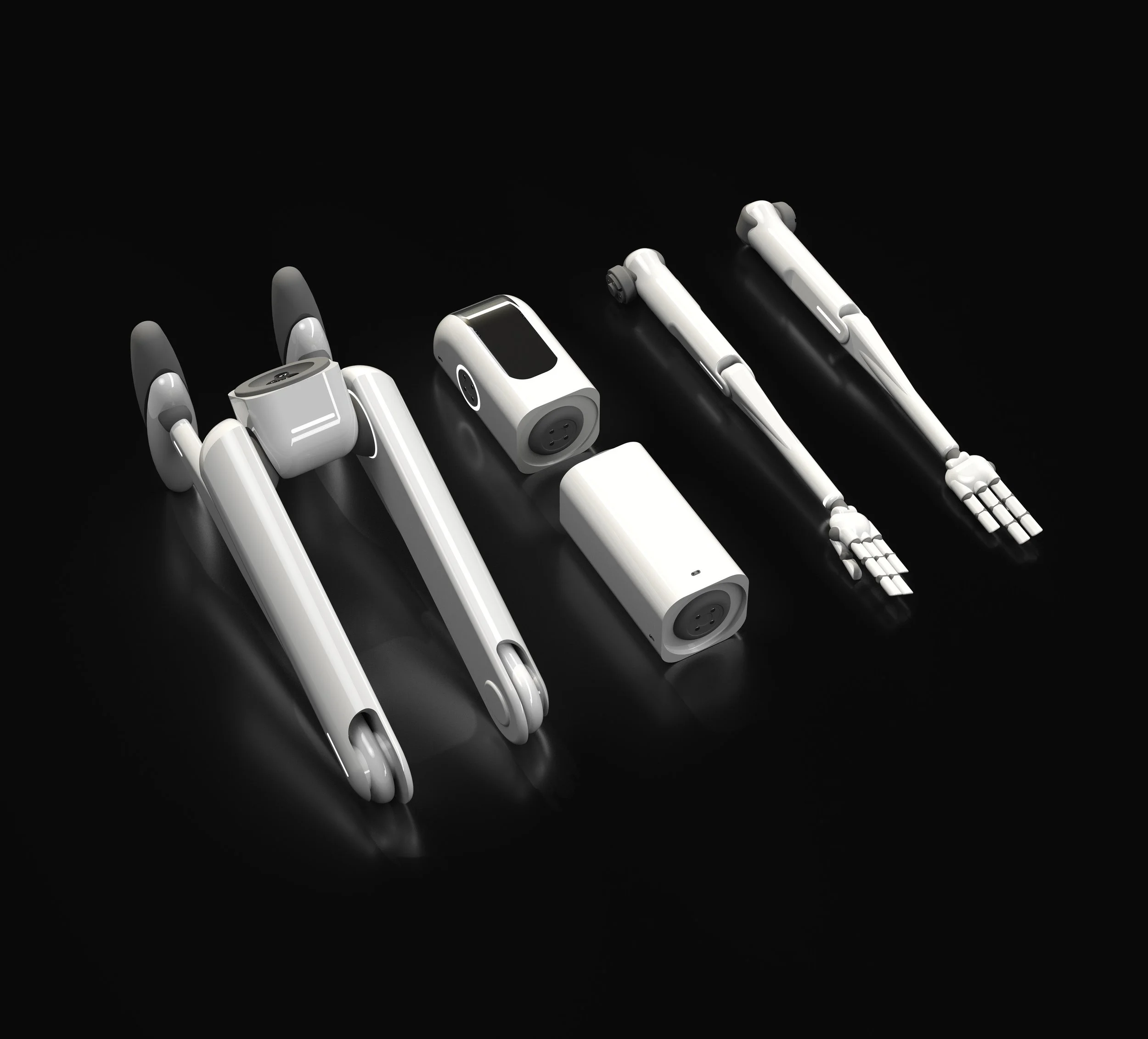

A modular design means the bot can meet different needs at different times. Detachable arms, legs, top and core make shipping easier and more sustainable, and let customers buy only the parts they need. The legs and arms also fold to save even more space (storage space on Starship is limited).

"I prefer no face; this is an artificially intelligent machine, not human."

Maja Pantic, Professor of Affective and Behavioural Computing

The base of a modular robot would likely be a set of arms and a brain. Beyond that, each use case might call for legs, wheels or other forms of mobility. For example, a bot that hangs from an overhead track in a kitchen might not need a battery core, since the track could supply constant power.

When you open the companion app, you land in a simulated environment surrounded by highlighted items you can act on. A panel on the left lets you browse and refine bot tasks.

Start a task

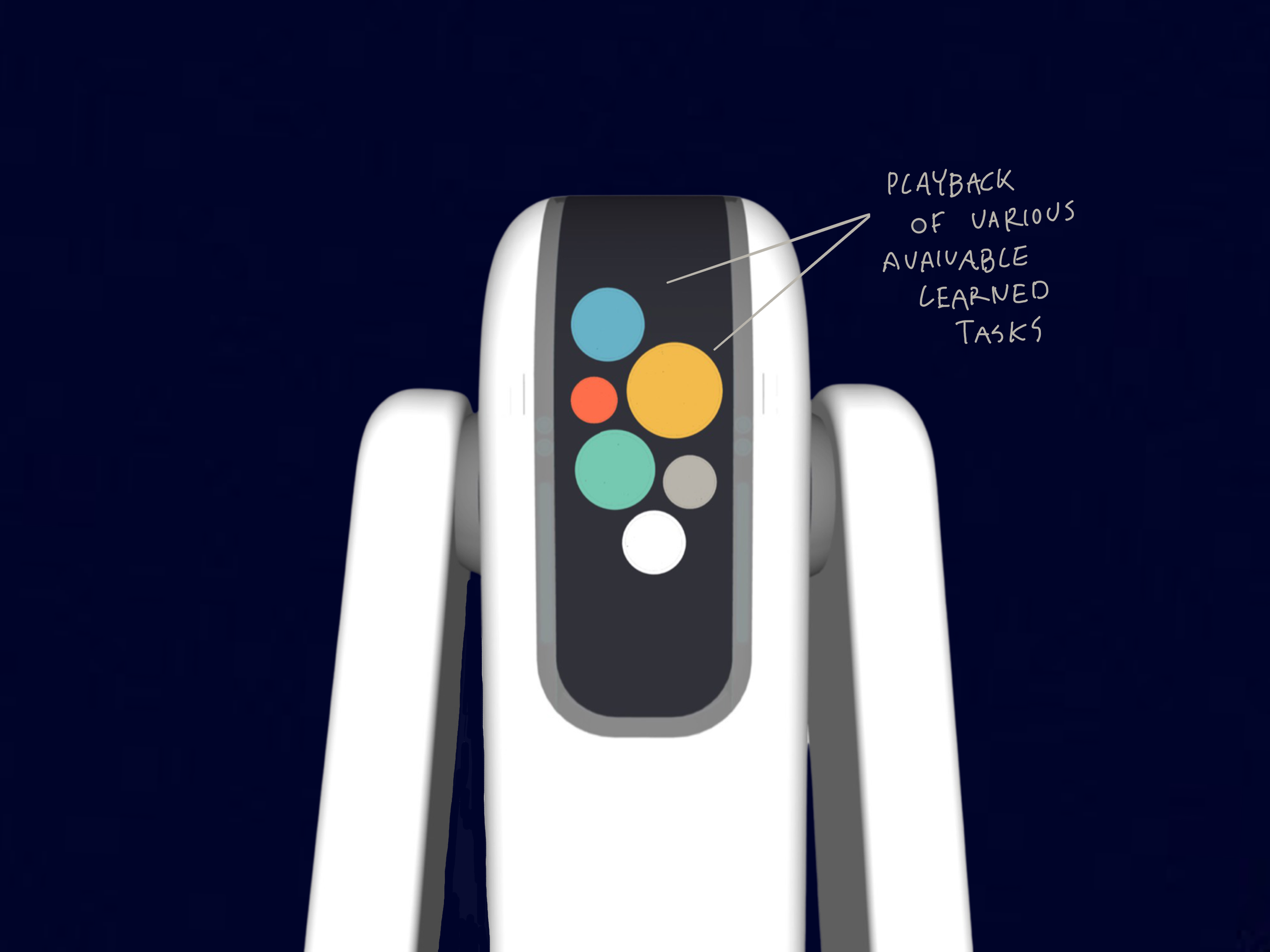

Each task is presented as a card with a preview and tags representing its building blocks. New blocks are added as the AI learns new skills, and blocks are combined into tasks like these, much as songs are assembled into a playlist.

Before starting a task, users can tap a block to adjust its values.

Additional context, such as object identifiers, energy usage and estimated duration, helps you refine your selection.

All set! The bot will begin the task and send you a notification once it's complete. You can queue other tasks to start once this one is done.

Early scraps